The world is opening up to travel once more. As passenger volumes increase, the question of how to manage security at external borders becomes more pressing. Border authorities must resolve a tension between quickly processing the many travelers who pose no risk and accurately identifying individuals of concern.

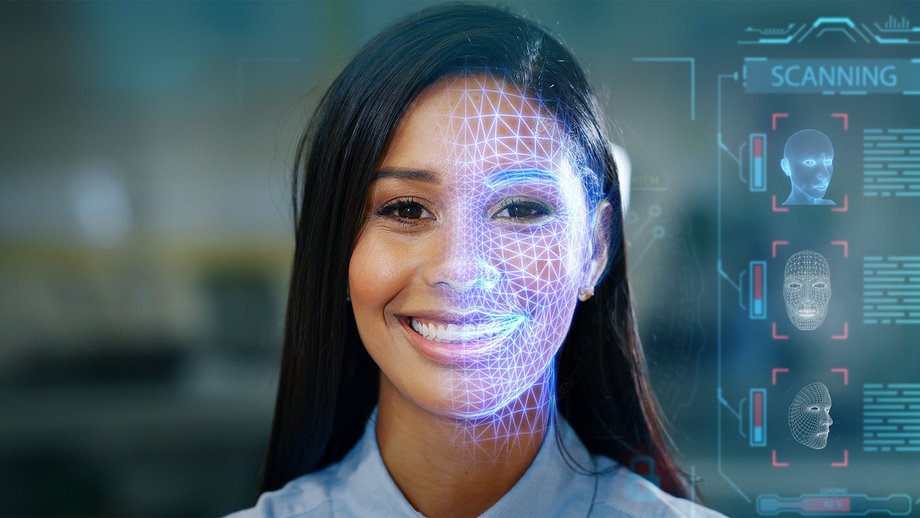

Developments in artificial intelligence (AI) systems mean that this technology has an important role to play in resolving that tension. AI can sift through large amounts of data to help identify potentially risky patterns of behavior.

However, deploying AI in the public sphere brings challenges as well as benefits. In Europe, there is both a legal and a moral obligation for decision-making to be transparent to some degree, so the use of AI technology comes with requirements around “explainability.”

Specifically, the European Commission’s proposed regulation on AI states that “high-risk AI systems shall be designed and developed in such a way as to ensure that their operation is sufficiently transparent to enable users to interpret the system’s output and use it appropriately.”[1] The proposal sets out a risk framework for AI, ranging from “minimal risk” for AI deployed in applications such as spam filters or video games, to “unacceptable risk” for AI designed to manipulate people’s free will. The use of AI in border control is classed as “high risk” and will be subject to strict obligations.