Increasing threats to the Trust Backbone

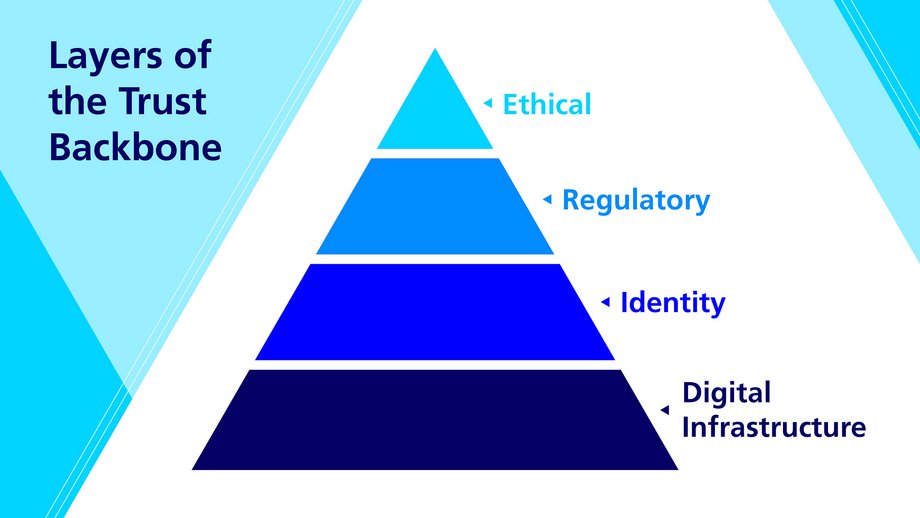

In an increasingly digitized world, the Trust Backbone is continuously being threatened at each of its layers. At the Digital Infrastructure Layer, for instance, cyberattacks on end devices and servers have been steadily rising, and they accelerated during the COVID-19 pandemic as remote work became standard practice.

Transacting online makes it difficult to validate and verify the identities of the parties involved. The result is many cases of fraudulent transactions, fake accounts, identity theft, and impersonation, all of which threaten trust at the Identity Layer.

Our everyday digital transactions generate an enormous amount of data. The need to handle this personally identifiable information properly has led regulators to introduce a broad set of data protection rules, such as the GDPR, and companies that fail to comply can face significant fines.

As another example, money laundering has grown steadily over the years, and financial authorities have imposed ever-higher penalties. As a consequence, complying with anti-money-laundering controls, procedures, and sanction checks has become significantly more important and more challenging. Regulatory requirements can also be expected to keep evolving, which forces constant adaptation at the Regulatory Layer of the Trust Backbone.

One threat to the Ethical Layer is the misuse of AI algorithms. Used for good, AI can improve manufacturing and supply chain processes or support cancer detection in healthcare. Used maliciously, however, AI can be trained to create “deepfakes” that, for example, spread fake news or lure unsuspecting end users into fraudulent schemes. Another threat to the Ethical Layer arises when AI does not function as intended, for example when biased decisions made by AI models lead to discrimination against certain societal groups.